Beware the bad survey: Science literacy isn’t as bad as the statistics make it look

By Cassie Barton

Read the catchy one-line statistics that circulate in the headlines and on social media and you’d be forgiven for thinking that public understanding of science is in a sorry state. A few months back, we heard that 80% of Americans want a warning label on any food that “contains DNA”. Earlier on, a poll found that 65% endorse the good old you-only-use-one-tenth-of-your-brain myth. And here in the UK, teachers seem to believe all kinds of brain myths, including a substantial minority (29%) who think that failing to drink enough water will literally shrink your brain.

Typically, these statistics have a short life cycle. They get used by journalists for amusing articles, grumbled about by science-y people on Twitter, and fade away before being resurrected, half-remembered, at dinner parties. Rarely does anyone take a critical look at where these statistics come from and whether they’re worth listening to. Which is ironic, because anyone who makes it their mission to debunk bad science needs to be just as wary of the bad survey.

When the DNA-labelling statistic came out, Ben Lillie wrote an excellent blog post pointing out that it was probably an artefact of poor survey design, since plenty of other studies suggest that most people know perfectly well what DNA is. He was dead right – polling is an inexact science at best, and if a finding looks too good (or bad) to be true, it usually is.

Here are some of the major hurdles that most of these studies seem to trip over.

Non-attitudes

It’s no secret that people say stuff on surveys that they don’t really believe. Social scientists even have a word for what you end up with: a ‘non-attitude’.

Let’s take the DNA-labelling finding as an example of how non-attitudes come about. It’s from a survey about attitudes to food issues, part of a question where people are asked whether they support a long list of policies. Crucially, “mandatory labels on foods containing DNA” is the only silly policy on the list – the others are plausible ideas, like taxing sugary foods and labelling what country meat comes from.

Like most people, I probably have more fun and more important things to do than answer a long survey on food policy. Confronted with a long list of agree/disagree questions, I might start skim-reading. Maybe I’ll tick ‘yes’ to everything (people are more likely to unthinkingly agree than unthinkingly disagree on surveys – it’s known as acquiescence bias). Maybe I’ll just agree with all the policies about food labelling, since it’s something I support on the whole. The chances that I’ll spend more than a few seconds weighing up what the question means and what I think about it are fairly low.
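To see how a single silly item can attract a large ‘yes’ vote, here is a minimal Python sketch. It assumes a deliberately simple, hypothetical model: some share of respondents agree with every item without reading it, and everyone else answers according to their genuine view. The function name and the numbers in the example are illustrative only and do not come from the food survey.

```python
def observed_agreement(true_support: float, acquiescent_share: float) -> float:
    """Share of respondents who tick 'agree' on one item.

    Hypothetical model: a fraction `acquiescent_share` of respondents agree
    with every item unread; the remainder agree only if they genuinely do,
    which happens at rate `true_support`.
    """
    for share in (true_support, acquiescent_share):
        if not 0.0 <= share <= 1.0:
            raise ValueError("shares must lie between 0 and 1")
    # Straight-liners always say yes; the rest say yes at the genuine rate.
    return acquiescent_share + (1.0 - acquiescent_share) * true_support


if __name__ == "__main__":
    # Illustrative numbers only: 10% genuine support, 30% 'yes to everything'.
    print(f"{observed_agreement(0.10, 0.30):.0%} appear to agree")
```

Under those assumptions, a policy that only one person in ten really supports shows up as 37% agreement, without anyone’s actual opinion changing.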

Even leaving acquiescence bias aside, any survey of attitudes is limited in what it can tell us about what people really think. Think back to the UK teachers’ survey. It might show that a lot of teachers ‘agree’ with a survey question about dehydration making your brain shrink. But it’s entirely possible that they hadn’t come across the idea before they saw it on the survey – which means up until that point, it was hardly a problem for their teaching practice. Survey designers have to be careful not to suggest attitudes that never would have occurred to people ordinarily.

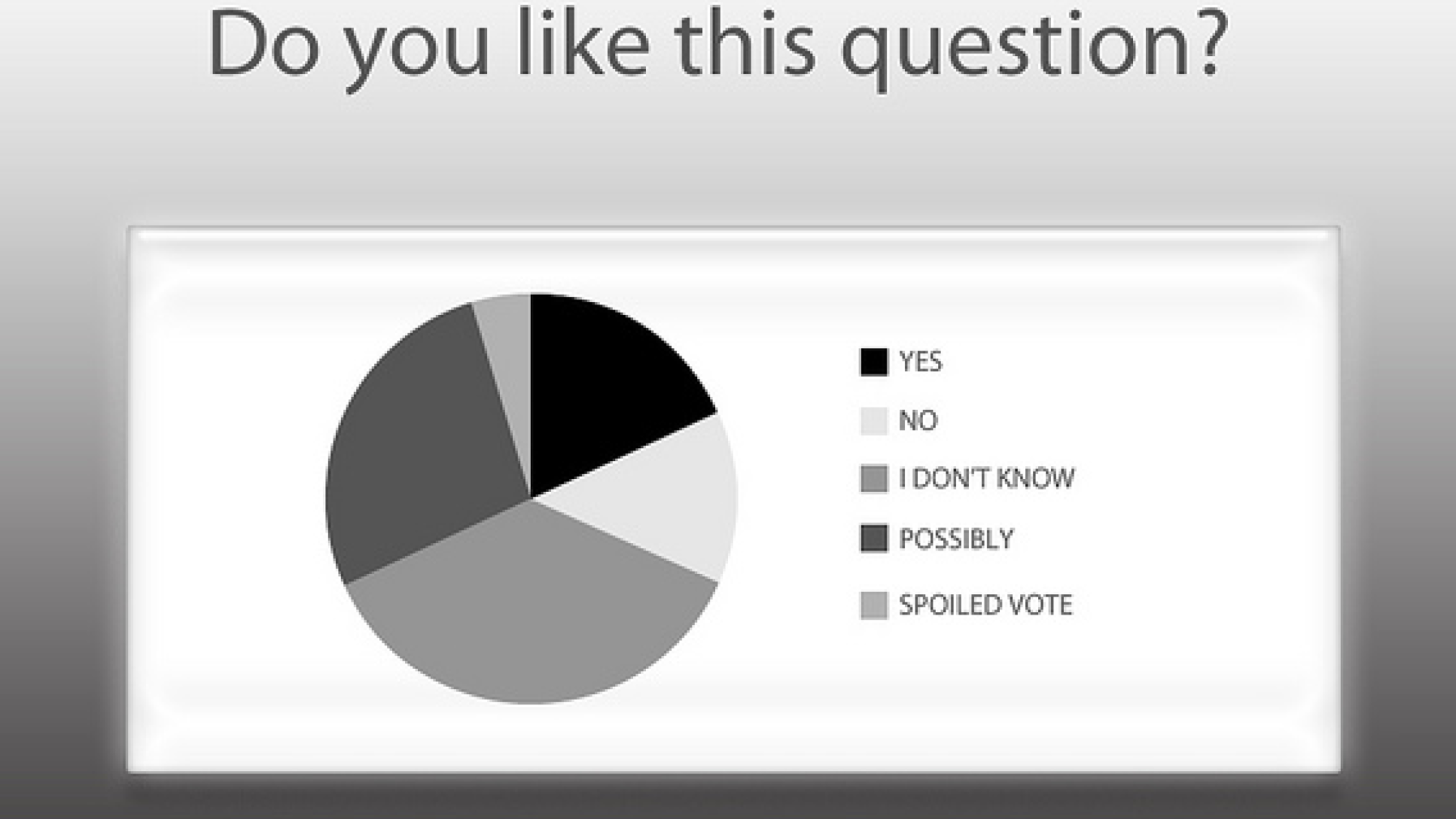

One thing that can help – though it’s by no means a catch-all solution – is to let people who don’t know the answer say so. A surprising number of surveys (including the food survey above) force people to choose between ‘agree’ and ‘disagree’. That means people who genuinely don’t know (or don’t care) have to misrepresent their opinions. Then again, ‘Majority of Americans noncommittal about DNA’ doesn’t make such a catchy headline, does it?
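The effect of leaving out a ‘don’t know’ option can be sketched with a little arithmetic. This Python example is a toy model under stated assumptions: the opinion split and `lean_agree` (the fraction of undecided people who pick ‘agree’ when forced) are made-up illustrative values, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinions:
    """Shares of a population holding each (hypothetical) underlying view."""
    agree: float
    disagree: float
    dont_know: float  # no real opinion: a potential non-attitude

    def __post_init__(self) -> None:
        if abs(self.agree + self.disagree + self.dont_know - 1.0) > 1e-9:
            raise ValueError("shares must sum to 1")


def forced_choice(op: Opinions, lean_agree: float) -> dict[str, float]:
    """Reported shares when the questionnaire offers only agree/disagree.

    `lean_agree` is an assumed fraction of the undecided who pick 'agree';
    values above 0.5 mimic acquiescence bias.
    """
    return {
        "agree": op.agree + op.dont_know * lean_agree,
        "disagree": op.disagree + op.dont_know * (1.0 - lean_agree),
    }


def with_dont_know(op: Opinions) -> dict[str, float]:
    """Reported shares when a 'don't know' box is offered and used honestly."""
    return {"agree": op.agree, "disagree": op.disagree, "don't know": op.dont_know}


if __name__ == "__main__":
    # Hypothetical split: half the population has no view on DNA labels.
    views = Opinions(agree=0.20, disagree=0.30, dont_know=0.50)
    for label, result in [("forced", forced_choice(views, lean_agree=0.6)),
                          ("with DK", with_dont_know(views))]:
        print(label, {k: f"{v:.0%}" for k, v in result.items()})
```

With these invented numbers, the forced-choice version reports a 50/50 split that could be headlined as ‘half of respondents back DNA labels’, while the version with a ‘don’t know’ box shows that half the sample has no view at all.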

Question wording

Let’s say people are reading the questions closely and really care about the issues they’re being surveyed on. That still doesn’t guarantee a clear-cut finding – the way questions are worded can have a big impact on responses.

Here’s another finding from the teachers’ survey: 57% think children are less attentive after consuming sugary snacks. The original researchers classed this as a ‘neuro-myth’ because there isn’t much scientific evidence for sugar triggering hyperactivity or inattention. However, there seems to be a gap between what the researchers think the question means and what teachers think it means. Neuroscientists may deal in causal pathways between body chemistry and the specific, measurable concept of ‘attention’. Teachers don’t care so much about whether sugar itself is the problem – it could be a placebo effect, or a different chemical. And they have a broader definition of ‘attention’ – for them, it’s whatever happens when kids stop giggling and face the front.

Interestingly, the research paper that sparked all the fuss in the UK actually discusses this point in detail. It’s publicly accessible on Nature and well worth a read.

Anyway, the lesson here is not to use jargon, particularly not jargon that has a different meaning for non-scientists. If people don’t know what the question means, they won’t know how to answer it properly.

Sampling

Sampling procedure is incredibly important if you want statistics that say something about everyone, not just the cluster of people who answered the survey. Say we’re told that 65% of survey respondents think we only use one-tenth of our brains. Does that mean that if you asked all 320 million people in the United States the same question, 208 million of them would definitely say the same thing?

Of course not. But if the sample is well designed, we can say that it’s approximately true, give or take a margin of error that can be calculated from the sample size. Good surveys use a large, random sample, where everyone in the population of interest (United States residents, in this case) has the same chance of being selected. This never works out perfectly in real life, but there are strategies that bring you closer to ‘good enough’.
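What ‘more or less true’ means for a random sample can be made concrete with the standard normal-approximation margin of error for a proportion, z·√(p(1 − p)/n). The Python sketch below applies it to the 65% figure. The sample size of 1,000 is a hypothetical value (the survey’s real n isn’t given here), and the formula is only meaningful when the sample really is random.

```python
import math

US_POPULATION = 320_000_000  # population figure used in the text


def margin_of_error(p_hat: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    Uses the normal approximation z * sqrt(p(1 - p) / n); z = 1.96 gives a
    95% interval. The finite-population correction is ignored, which is
    harmless when n is tiny compared with the population.
    """
    if n <= 0 or not 0.0 <= p_hat <= 1.0:
        raise ValueError("need n > 0 and 0 <= p_hat <= 1")
    return z * math.sqrt(p_hat * (1.0 - p_hat) / n)


def population_range(p_hat: float, n: int,
                     population: int = US_POPULATION) -> tuple[float, float]:
    """Scale the 95% interval for a sample proportion up to a headcount."""
    moe = margin_of_error(p_hat, n)
    return (p_hat - moe) * population, (p_hat + moe) * population


if __name__ == "__main__":
    n = 1000  # hypothetical sample size
    low, high = population_range(0.65, n)
    print(f"point estimate: {0.65 * US_POPULATION / 1e6:.0f} million")
    print(f"95% interval: {low / 1e6:.0f} to {high / 1e6:.0f} million")
```

With a random sample of 1,000, the 65% becomes roughly 199 to 217 million people rather than exactly 208 million. Quadrupling the sample to 4,000 only halves that margin, because the error shrinks with the square root of n.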

None of the studies here attempted random sampling. Yes, 65% of survey respondents think that only one-tenth of their brain is switched on – but a look at the press release shows that the respondents came from an online panel. They took part if they happened to be online, saw the link and felt interested, either in the topic or in the compensation that was probably offered. There’s no way of knowing how unusual these people were, or how well their responses generalise to the whole population.
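A small simulation shows why self-selection is harder to fix than sampling error. This Python sketch builds a hypothetical population in which 40% hold a belief, then compares a simple random sample with an opt-in panel. The key assumption, which is invented purely for illustration, is that believers are twice as likely to opt in (say, because the topic interests them more). All names and numbers are hypothetical.

```python
import random


def make_population(size: int, true_share: float, rng: random.Random) -> list[bool]:
    """Hypothetical population: True means the person holds the belief."""
    return [rng.random() < true_share for _ in range(size)]


def random_sample_share(population: list[bool], n: int, rng: random.Random) -> float:
    """Share holding the belief in a simple random sample of n people."""
    sample = rng.sample(population, n)
    return sum(sample) / n


def opt_in_share(population: list[bool], base_rate: float,
                 believer_boost: float, rng: random.Random) -> tuple[float, int]:
    """Share holding the belief among people who opt in to an online panel.

    Each person joins independently with probability `base_rate`, multiplied
    by `believer_boost` for believers (an assumed link between the belief
    and interest in the topic).
    """
    joined = [
        holds for holds in population
        if rng.random() < base_rate * (believer_boost if holds else 1.0)
    ]
    if not joined:
        raise ValueError("nobody opted in")
    return sum(joined) / len(joined), len(joined)


if __name__ == "__main__":
    rng = random.Random(42)
    people = make_population(200_000, true_share=0.40, rng=rng)
    print(f"true share:     {sum(people) / len(people):.1%}")
    print(f"random n=1,000: {random_sample_share(people, 1_000, rng):.1%}")
    share, n_joined = opt_in_share(people, base_rate=0.05, believer_boost=2.0, rng=rng)
    print(f"opt-in n={n_joined:,}: {share:.1%}")
```

Under these assumptions the opt-in panel settles near 0.4 × 2 / (0.4 × 2 + 0.6) ≈ 57% however many people join, while a random sample a tenth of the size lands close to the true 40%. A bigger self-selected sample only makes the wrong answer look more precise.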

Does it even matter?

All of these statistics are based on very specific questions. And that’s for a good reason – it’s impossible to audit everything the public does and doesn’t know about science, so a few key questions are chosen as indicators of how the land lies. They’re actually much more useful in bulk, being tested repeatedly over time, than they are as one-offs.
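Tracking an indicator over time raises its own question: is a change between two survey waves real, or just sampling noise? A minimal Python sketch, assuming both waves are independent simple random samples that asked the identical question, checks whether the 95% confidence interval for the difference in proportions (unpooled standard error) excludes zero. The 65% and 62% figures and the sample sizes are hypothetical.

```python
import math


def change_is_detectable(p1: float, n1: int, p2: float, n2: int,
                         z: float = 1.96) -> bool:
    """True if the 95% CI for p2 - p1 excludes zero.

    Assumes two independent simple random samples asking the same question;
    uses the unpooled standard error of the difference in proportions.
    """
    if n1 <= 0 or n2 <= 0:
        raise ValueError("sample sizes must be positive")
    se = math.sqrt(p1 * (1.0 - p1) / n1 + p2 * (1.0 - p2) / n2)
    return abs(p2 - p1) > z * se


if __name__ == "__main__":
    # Hypothetical waves: the indicator drops from 65% to 62%.
    for n in (1_000, 5_000):
        verdict = change_is_detectable(0.65, n, 0.62, n)
        print(f"n={n:,} per wave: change detectable? {verdict}")
```

With 1,000 respondents per wave, a three-point drop is within the noise; with 5,000 per wave it is not. This is one reason repeated, consistently designed surveys say more than any single headline figure.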

Filter them through the media, though, and you often end up with people getting far too hung up on the little fragments of knowledge being tested. By themselves, they don’t matter. Remember the teachers who didn’t care about the specifics of ‘sugar’ and ‘attention’? People don’t always need scientific facts as they go about their lives and jobs. Most of what they do is based on hard-earned common sense. Mock that common sense and you risk damaging the public’s relationship with science long-term.

Using surveys well

I’m not trying to suggest that this kind of survey is useless – just that its findings should be taken with a pinch of salt and a careful look at the methodology. Treat new survey data the way you’d treat any new scientific finding: try and find the original source and evaluate it. Did they do everything they could to avoid bias? Is there anything you would’ve done differently?

Once you have a good finding, you have a choice about how to use it. Do you want to make fun of people who are less educated than you and reinforce the stereotypes of the dumb layman and sneering scientist? Or do you want to try and learn something about the people you’re talking to and become a better science communicator?

Survey data can be a great resource. These days, a lot of research has moved past assessing what people know about science – we’re interested in what people think about it. In the US, the General Social Survey asks how much people trust and admire scientists, while the Pew Research Center focuses on people’s attitudes to key issues like genetic engineering and climate change. In the UK, surveys like Public Attitudes to Science and the Wellcome Trust Monitor have similar goals. Demographic data is collected as a matter of course, which means this kind of knowledge can help science communicators really understand their audience – not just how well-educated they are, but how they feel about scientists and scientific ideas.

For all the mockery and misunderstanding that goes on, findings are surprisingly positive. Here in the UK, a good 72% of people agree that “it is important to know about science in my daily life”. Perhaps it won’t be so hard to build understanding between scientists and the public after all.

Cassie Barton is an MSc student in Social Research Methods at the University of Surrey, UK. She writes about science and has research interests in science communication and engagement. Twitter: @cassier_b

The original quote about the brain was “We use, at most, only 10% of the capability of our brains.” This was reasoned from the fact that some savants can remember many of the details of every day of their lives, others can see the pages of enormous libraries in their minds, others can memorize enormous volumes of numbers and calculate faster than you can with a hand calculator, and some “ordinary” people can speak up to 20 languages fluently. The quote was never meant to say that 90% of your brain was “switched off”, but even if it was, we now understand memory storage as being holographic, so the activity of neurons would be diffuse anyway.

Scientific literacy tests are flawed in that most of them don’t even question people on the core of science…the scientific (empirical) method, and its limitations. Asking people to regurgitate a bunch of pop culture “facts” based on current scientific theories is not the same as understanding how scientific principles are arrived at through proper experimental methodology. I’d be surprised if 1% of the population could explain the scientific method, its formalities, what a testable/falsifiable hypothesis is, the limitations of various data types, and then critically apply it to the various junk-science studies that are constantly being presented in the media as “facts” or “proof”.