Openly Streamlining Peer Review

We are delighted to host our first guest post on Biologue by James Rosindell and William D. Pearse from Silwood Park, Imperial College London. They share their view on how we might improve peer review.

Elsevier recently announced a peer review challenge. Open to all, the competition invites contestants to submit a 600-word idea of how to improve the peer review system. Winning entries are soon to be announced on Elsevier’s website. Unfortunately, the terms and conditions of entry involve making your idea the property of Elsevier, so we fear that even those who don’t win will no longer have the right to implement their idea or make it public. Since having our idea known is more important to us than winning a prize, we decided to make our entry publicly available here rather than enter the challenge. In this way, we hope that our ideas will contribute to the debate on this subject and to the implementation of some of our suggestions.

Computing and the Internet have revolutionised access to scientific work over the last 20 years, while the peer review system has remained relatively unchanged; in essence, it is optimised for a pre-Internet world despite incorporating obvious features such as online submission. It would be remarkable if the existing peer review system remained the optimal one for the 21st century now that swift, international, free, paperless exchange of information makes new ideas easier to disseminate, free of barriers such as the cost of printing and distribution. There may be resistance to moving away from a “local optimum” – a state where any small change appears to be a step in the wrong direction but the correct large change may yield huge improvements. There may also be questions over what the new system should be, but we are glad to see that Elsevier acknowledges the need to consider alternatives through their challenge. We do not pretend that our idea is a complete solution, but we hope to contribute to the necessary discussion over what peer review should be. We believe that an effective and fair reviewing system is needed to support a rapidly growing scientific community and body of knowledge.

Our Entry

Peer review is an essential part of science, but there are problems with the current system. Despite considerable effort on the part of reviewers and editors it remains difficult to obtain high quality, thoughtful and unbiased reviews, and reviewers are not sufficiently rewarded for their efforts. The process also takes a lot of time for editors, reviewers and authors.

We believe that these problems are inter-related. A fairly rewarded reviewer should do a better job, and thus make the editors’ task easier. The publishing process is already expensive, and scientists value their reputations, so rewarding reviewers with scientific status rather than with money seems the natural solution to us. One way would be to make reviews signed, published online, and citable. This in turn would make the process less frustrating for authors: a scientific discussion is useful and exciting, but a debate with an anonymous opponent who has nothing to gain or lose apart from time is not.

We propose a new system for peer review. Submitted manuscripts are made immediately available online.

Commissioned and/or voluntary reviews would appear online shortly afterwards. The agreement or disagreement of other interested scientists and reviewers would be automatically tallied, so editors would have a survey of general opinion, as well as full reviews, to inform their decisions. Far from being unrealistic, a similar system already exists on the web: Reddit, which is used to rank webpages based on public opinion, and has nearly 100 million views per day (alexa.com). Reddit incorporates useful features such as karma, which indicates the overall reception of an individual’s posts.

In our proposed system, users would log into the system and get the opportunity to vote once for each article (or reviewer’s comment), thereby moving it up or down the rankings. Access could be restricted to those within the academic world or even within an appropriate discipline, so only appropriately qualified individuals could influence the rankings. The publication models of established journals would be preserved, as full publication of an article could still take place once the journal is satisfied with the scientific community’s reception of the work. Specialists would have immediate and free access to the cutting edge of science, while the wider community would still benefit from the filtering function of peer review. User biases regarding author identity or subject area could be automatically detected, and users could then be given the chance to defend their views openly. Conflicts of interest could be declared and taken into account when calculating rankings. Complete openness would mean no one need fear retribution for stating their scientific position. Unfair treatment would be clear for all to see, and not hidden behind the walls of anonymity created by the current system. If a journal rejects a paper, it would remain online along with its reviews and its ranking. Authors could submit a revision to a different journal along with a link to the earlier version, so reviewers’ comments and users’ opinions are retained for further use, significantly increasing the system’s efficiency.
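The voting rules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a design from our entry: the `Item` class, the `eligible_users` set and the choice to simply discard votes from authors or users with a declared conflict are all hypothetical, and a real system might weight such votes rather than drop them.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """An article or a review that users can vote on (hypothetical model)."""
    title: str
    authors: frozenset
    votes: dict = field(default_factory=dict)  # user name -> +1 or -1

    def vote(self, user, value, eligible_users, declared_conflicts=frozenset()):
        """Record one vote per user; return True if the vote was counted."""
        if value not in (1, -1):
            raise ValueError("a vote must be +1 (up) or -1 (down)")
        if user not in eligible_users:
            # Assumption: access is restricted to a known set of academics.
            raise PermissionError(f"{user} is not allowed to vote")
        if user in self.authors or user in declared_conflicts:
            # Assumption: conflicted votes are simply not counted.
            return False
        # Storing votes by user means a second vote replaces the first,
        # so nobody can vote more than once per item.
        self.votes[user] = value
        return True

    @property
    def score(self):
        """Net agreement: up-votes minus down-votes."""
        return sum(self.votes.values())


def rank(items):
    """Order items from best to worst net score."""
    return sorted(items, key=lambda item: item.score, reverse=True)
```

For example, with `eligible = {"ann", "bob"}` and `paper = Item("Paper A", frozenset({"ann"}))`, the call `paper.vote("bob", 1, eligible)` is counted, whereas `paper.vote("ann", 1, eligible)` is ignored because Ann is an author.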

Ultimately, it may be possible for journals to approach authors for their unpublished manuscripts based on the online reviews and rankings. Indeed, since it is possible to cite articles in online repositories, journals agreeing to publish these works could immediately claim all pre-existing citations to contribute to their impact factor. A recent simulation suggests that a system where editors bid for articles increases everyone’s number of publications and thus speeds the advancement of science (Allesina, 2009). Many of these changes could be introduced incrementally. Publishing reviews and using the existing (open-source) Reddit code to rank scientific work would be straightforward, and could yield significant benefits to everyone involved in publishing science.

References: Allesina, S. (2009) arXiv:0911.0344v1

This post was originally submitted to PLOS Biology before the submission deadline for Elsevier’s competition. It has not materially changed since that date.

The authors declare they have no competing interests.

James and Will, I applaud your efforts! I’m going to play the contrarian and say that it’s hard to put the disintermediation genie back in the bottle. Accelerating the pace of peer review, making it open and de-anonymized, and instituting a system of microcredits and upvotes that empower scientists over gatekeepers are all superb ideas around which the scientific community will hopefully coalesce.

But why does this have to happen in the context of a journal?

To me, Open Science and self publishing go hand in hand, which is why I re-imagined the laboratory website as a self publishing platform (please see above link). Before creating my new site, over a decade of admittedly ad hoc, two-way email correspondences with hundreds of my academic peers has demonstrated that it’s possible to solicit and cultivate scientific colloquy that approximates and often rivals the quality of discourse in anonymous official peer review.

While the reddit ranking algorithm might be a good place to start, it’s fairly specialized for its purpose and may not be appropriate to rank contributions to peer review. For example, the story algorithm strongly favors new submissions, while the comment system does not.

There’s a lot of good writing on the topic:

How Reddit ranking algorithms work

http://amix.dk/blog/post/19588

reddit’s new comment sorting system

http://blog.reddit.com/2009/10/reddits-new-comment-sorting-system.html
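To make the difference concrete, here is a rough Python sketch of the two approaches as I understand them from the posts linked above. It is an approximation, not reddit's actual code: the function names are my own, the 45000-second divisor is the value I recall from the first post, and `z=1.96` (a 95% confidence level) is an assumed choice.

```python
from math import log10, sqrt


def hot_score(ups, downs, seconds_since_reference, decay=45000):
    """Story-style ranking: net votes on a log scale plus a time bonus.

    Every `decay` seconds of newness is worth as much as a tenfold
    increase in net votes, so recent submissions are strongly favored.
    """
    net = ups - downs
    order = log10(max(abs(net), 1))
    sign = (net > 0) - (net < 0)  # +1, 0 or -1
    return sign * order + seconds_since_reference / decay


def wilson_lower_bound(ups, downs, z=1.96):
    """Comment-style ranking: lower bound of the Wilson score interval.

    Depends only on the votes, not on when the comment was posted.
    """
    n = ups + downs
    if n == 0:
        return 0.0
    p = ups / n  # observed fraction of up-votes
    centre = p + z * z / (2 * n)
    margin = z * sqrt((p * (1 - p) + z * z / (4 * n)) / n)
    return (centre - margin) / (1 + z * z / n)
```

Under the first function, a day-old submission with 10 net votes outranks an older one with 100, whereas the second function has no time term at all, which is closer to what one would want for ranking reviews.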

Full disclosure: PLOS employee.

A good point; we’re not necessarily saying we’d weight everything by time, just that we’d allow people to up/down vote something. We wouldn’t want Darwin losing karma because he published a while ago :p

A good point! I can’t speak for James, but my only problem with lab websites is I think categorising by lab makes it hard to find material. Labs work on lots of different things, and I need to know the name of a lab in order to check its site.

Journals are probably not the best solution to this problem, but at least they have informative titles that help me decide what RSS feeds to subscribe to. Perhaps a big, centralised catalogue with tagged articles would be the way forward, a little like arXiv.

Hi James and William, Your idea is very interesting indeed. You referred to Elsevier’s Peer Review Challenge, for which I am responsible, and our “terms and conditions”. Let me say that with regard to those entrants who will not be chosen as a winner, based on feedback that we received during the challenge, their ideas can be further developed (beyond the idea as they presented in their entry) as they see fit and, we hope, in cooperation with Elsevier. Indeed, after the winners are announced we will ask all entrants if they would like us to make their idea fully public. This way the community can see the wealth of new peer review ideas – stimulating discussion and piloting new ways of peer review by anyone.

You make a good point, Ethan, and your website is very nice. I agree with Will’s response that it might be hard to find new research material if it only appeared on lab websites, whereas a centralized site does not have this problem. Perhaps a way to reconcile these two concepts would be to have central repositories containing a short summary and a link to people’s lab websites for the complete work. An important issue that would need to be solved as part of this is citations; whilst credit in the academic community continues to be citation based, scientists will not be willing to publish their work in forms that do not accrue genuine citations. I think that the system of journals will remain for a while yet, but they will inevitably move away from print copies and other outdated practices as the digital age continues to enhance our methods of information exchange.

Clare, Thank you for your comment, I am glad that you liked our idea. I think it is great that Elsevier has taken steps to enable all entries to be made public and developed further. It is perhaps unfortunate that this change could not have been reflected in the competition terms and conditions earlier on. In your E-mail to me shortly before the challenge deadline, you only said that you would take a closer look at the issue when structuring *future* challenges. The terms and conditions are still online at http://www.peerreviewfuture.com/?page_id=14 and clearly state that “All entries become the exclusive property of Sponsor [Elsevier]”. With hindsight and with the changes you have since promised to make, I now see that we could have safely entered the competition. Nevertheless, at the time of the closing date it unfortunately appeared that not entering was the only way to be guaranteed a voice. Of course, I still hope that publishers will implement components of our idea in the future and I am delighted to see a variety of further ideas appearing as finalists to the competition.

Hi James and Will,

Brilliant idea! At what stage of development are you guys with this? Does Elsevier completely take ownership of this idea now?

I was working with a team in a Spanish conference on a similar open publication model. I’d be quite interested to discuss this with you guys and see if this can be incorporated in an existing prototype you guys are working on.

Drop me an email, and we can discuss this further.

Cheers

This is a very interesting post, and a thoughtful solution. The only point I’d quibble with is: “Complete openness would mean no one need fear retribution for stating their scientific position.”

For an early career scientist, retribution does not only come in the peer review process, but also in funding reviews and job applications. Early on I had decided to sign my reviews, but I have had situations where I felt that signing my review could lead to problems in future hiring or funding opportunities, where the reviewee might become the reviewer and the openness of that review might not be assured.

I suppose my point is that not all peer review occurs in journals, and as such, anonymity might still be important, especially for people in early career stages.

Ipshita, thanks for your post. The project you are working on sounds most interesting and I would be glad to discuss it further with you over E-mail. As far as our idea is concerned, it is just an idea at this stage. We have not developed any code though we have identified the preexisting Reddit code as being potentially suitable for reuse. Elsevier have no rights whatsoever to our idea – that was the whole point of our posting here instead of entering the competition.

You are right, Simon, I completely understand this concern. Perhaps the way funding distribution is decided should also be given a rethink? Apart from the issues that you raised above, the amount of time that is spent in competing for funding (and deciding who gets it) is another potential worry. As the scientific community increases in size and funding gets tighter, this situation may get worse. I have wondered for a while whether funding should perhaps be given based on already published papers rather than based on future promises. As far as hiring is concerned, it would not be nice to work with people who would hold your signed reviews against you, so I think funding is the main reason not to sign reviews as a junior researcher.

You’ve both made some good points, but I’d like to add that signed reviews from more senior scientists might help the situation somewhat. That way you’d know at the application stage what someone’s views about your work were, and might even help institutions when putting together selection panels.

[…] evidently has a competition out for new suggestions for Peer Review. I found out about it on this PLOSone article (in my twitter stream via Jacqueline Gill), which is only incidental, as you can see (once you read […]

I like these proposed changes. There was just one phrase that scared me a bit: “Commissioned and/or voluntary reviews would appear online shortly afterwards” – the “or” in this! I think specific experts should still be asked to review a manuscript. If you leave it to volunteers, I fear you would get a disproportionate number of reviews done by procrastinating PhD students, opinionated retired academics, and the odd not-so-strong academic who has plenty of time, and a tendency to trample other people down in order to feel better about themselves…

Fair point, Margot. I had heard a system proposed where all reviews were voluntary, but I think commissions are really needed still for the reasons you put forward.

First off I applaud your initiative and all attempts at redesigning a problematic system.

Until the problems of promotion / tenure / citation etc. are solved (and they are integral to the publishing problem), I’d like to remark that many of our problems with the peer review system lie not with the reviewers, but with the editors (and I’m saying this as an Associate Editor, a member of several editorial boards, and as an author of a recently-rejected manuscript!). Many editors have been reduced (or reduced themselves) to bureaucrats who count review scores rather than intelligently reading and critiquing the manuscripts and reviews they receive. If editors would do a better job at reviewing reviews, then the whole process would work better. As there are fewer editors than reviewers, this is probably where efforts should go. Perhaps the answer is some type of editor education coupled with reward (monetary? reputation?). Or maybe an on-line repository for outlandish reviews as negative reinforcement for journals? Or simply, as a friend recently said, “The answer is competent editors with high ethical standards who can make an educated judgement call”. Is that too much to ask for?

[…] be announced in mid-August. Referring to this challenge, James Rosindell and Will Pearse presented a peer review model on PLOS Biology that very much resembles open peer review proceedings (OPR) and added some interesting thoughts. I […]

[…] — Hypothes.is (@hypothes_is) August 9, 2012 “We propose a new system for peer review. Submitted manuscripts are made immediately available online.” blogs.plos.org/biologue/2012/… […]

Excellent plan, and one that I would thoroughly support. It’s interesting that the system being implemented by PeerJ is very similar to this: one path that authors can take is to post their submission on the preprint server immediately, and allow it to be “promoted” to the actual journal as and when it passes peer review.

By coincidence, I’ve been writing about peer-review over on my own blog in the last week. You may be interested in these three posts:

Where peer-review went wrong

Some more of peer-review’s greatest mistakes

What is this peer-review process anyway?

(Let’s hope this comment doesn’t get spammed for including too many links!)

@Margot

Don’t get me wrong, but your differentiation into “experts and the stupid” (bringing it to the point) seems to me kinda short-sighted. Actually I feel slightly offended, as I would probably be in the procrastinating PhD student category, following your terminology.

Let’s face the basic problem in this debate: there is no objective answer to the question of who is an expert and who is not. And because of this we installed a system of gate-keepers to regulate who gets which title and who does not, who is called onto which commissions, who will get which chair and so on. That is basically peer-reviewing in a different way and on different levels.

Having the missing objectivity in mind, this doesn’t mean everyone makes equally intelligent statements. However, the other way round, being called an expert doesn’t necessarily make your statements more intelligent than those of “laymen”. Actually, in my young career as a researcher, I have already met more than a handful of “experts” who didn’t exactly match my idea of an expert or just seemed to me not very intelligent at all. Anyway, I would never make a general statement out of it or pigeon-hole them as “smug opportunist” or any other category you mentioned. What else is this than trampling down someone’s reputation by using an argumentum ad verecundiam?

The point is, not only “esoteric crackpots” are developing their knowledge and knowledge standards in certain peer-groups and discourses, but all of us. Our reception of who is capable of examining or reviewing other people strongly depends on the trust we give to a certain person or institution, and if you do not trust the work of a procrastinating PhD student, then, I think, he or she shouldn’t appear in the vote when it comes to you. Another person, though, could be interested in that vote and want it to be counted. On my blog I made a proposal for how to handle this problem.

Frederik,

I think you missed the word “specific”. Everybody benefits from manuscripts being reviewed by the most topical specialist, and it shouldn’t be left to those with most time, or those who erroneously think they have the most time. How these overlap is something I didn’t reflect on.

So you might be one of either of the two latter categories? Congratulations! But do you think you should do A) your proportional share of peer review (however that is quantified) or B) more than that? If A: fine, we agree. No need to go call yourself stupid. If B: please elaborate.

And of course, editors aren’t perfect in judging which scientist is the best choice for a certain review, but that is an entirely different matter.

Margot,

I don’t mind if a person is addressed specifically to review a paper. I just don’t find it necessary as a counterweight to “the wrong reviewers”, as you suggested. And I don’t really see the difference between “specific experts” and volunteers. The reviewers of the journals I worked for volunteered as well – in the end they are not paid – and that is the common case.

Frederik,

I don’t know where you get all these normative descriptions from. “The wrong reviewers”? And I also never suggested reviewers should be paid. I just think reviewers should be matched to the manuscript based on expertise. If you just throw the manuscript out there, and let it be reviewed by whoever feels like reviewing it, you run the risk that those who do will be a population biased by (perceived) availability of time. Is it clear now?

[…] wrote an article with James Rosindell suggesting a new open peer review system where every aspect of peer review is completely open, yet paradoxically I rarely sign reviews. My […]

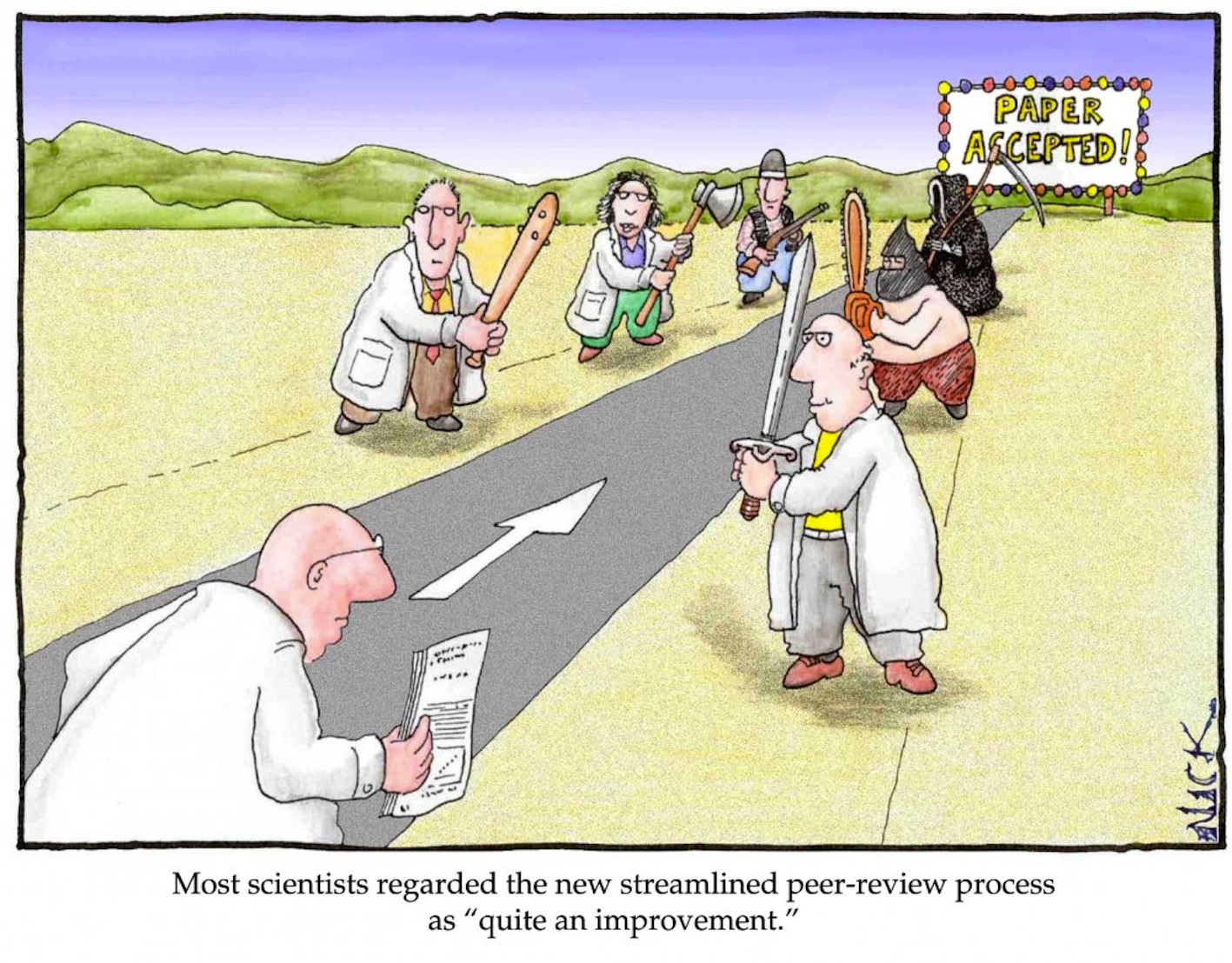

[…] Cartoon by Nick D Kim, strange-matter.net (please see site for terms of reuse) Source […]
