Weighing Up Anonymity and Openness in Publication Peer Review

Scientists are in a real bind when it comes to peer review. It’s hard to be objective when we’re all among the peer reviewing and peer-reviewed, or plan to be. Still, we should be able to mobilize science’s repertoire to solve our problems.

Yet, with exceptions for a few journals – most notably The BMJ – we haven’t used strong scientific methods to decide what to do about peer review for publications. We’ve largely let peer review remain in science’s blind spot.

When it comes to the potential for bias in the editorial process, that blind spot often turns into a fiercely hot one. Perhaps most of all on the question of being open with identities. Personal convictions and fears, with a study or two that reinforce them, drive strong opinions that in turn drive practice.

Determining practice based on a consensus of practitioner opinion doesn’t always end well, though, does it? Even well-intentioned interventions can cause harm – or have no real consequences at all, leaving the problems untouched. And there’s a lot that can go wrong with editorial review.

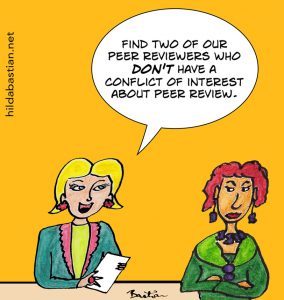

There are some consequences that flow inevitably from the choice of anonymity or naming, like workload for journals, or the chance to reveal peer reviewers’ conflicts of interest that editors don’t know about. I’ll come back to that later. But first, what evidence do we have that masking the identities of authors and peer reviewers achieves what it is meant to?

Well, it’s complicated. Which means it really needs a solid, up-to-date systematic review. The only systematic review, by Tom Jefferson and colleagues, has several drawbacks though. It doesn’t address fairness or bias as outcomes, for example. It’s restricted to biomedical journals, and the search for studies was done in 2004. [I’ve added a comment about it on PubMed Commons: archived here, with author reply.] [Update on 12 June 2016: A new systematic review by Rachel Bruce and colleagues addressed openness and blinding. It was more limited in scope – only randomized controlled trials and biomedical journals – and found no studies that were not included here. I added a comment on PubMed Commons, archived here, with author reply.]

So I’ve taken a deep dive into this literature. I found 17 relevant comparative studies, 12 of which are controlled trials. The quality of these studies varies greatly, especially the ability to control for variables. Some are in hypothetical situations. But there are some very good, decent-sized trials.

There is a numbered list of all the relevant studies I found, alphabetically by author, with links, at the bottom of this post. And there’s a declaration of my own interests (including close ties with The BMJ, which features prominently in this literature).

Let’s start with authors. Blinding peer reviewers to authors’ identity is one of the most commonly advocated strategies to ensure that the most deserving papers make it past the peer review gauntlet.

1. Did attempts to mask authors’ identities affect acceptance or rejection of manuscripts, or the scientific quality of peer review reports?

When masking authors’ identities is coupled with a policy of not revealing peer reviewers’ identities, this style is called double-blind peer review. It can range from having a cover page without the authors’ identities and affiliations, through to more detailed efforts to remove identifying clues from the manuscript when it goes to the peer reviewers. (When only the peer reviewers’ identities are withheld, that’s called single-blind peer review.)

This was addressed by 6 randomized controlled trials (RCTs) (1, 5, 6, 8, 12, 13), 1 non-randomized controlled trial (2), and 1 cross-sectional study comparing citation rates of journals with different policies (10).

The non-randomized trial (2) had a number of quality issues, including a particularly large number of comparisons with no adjustment for multiple testing. The author used statistical significance testing, but without pre-specifying a significance level. The findings didn’t reach the conventional 5% level.

The rate of failure of blinding in the trials was high: average failure rates ranged from 46% to 73% (although in 1 journal within one of the trials it was only 10%). Even when the researchers analyzed actual blinding separately, though, the major weight of the evidence here still showed no statistically significant difference in review quality or editorial decision (5 out of 6 RCTs).

When it made a difference to peer reviewers’ reports (10, 12), the difference was small. That difference was 0.4 on a 5-point scale, where editors had pre-specified 0.5 as a difference large enough to affect editorial decision-making (12), and an average of less than 1 extra citation in the study that used citations as the measure of article quality (10).

So masking authors’ identities hasn’t been shown to make an important difference in what studies get published. We’ll come back to studies looking at the impact on bias later.

2. Did revealing peer reviewers’ identities affect acceptance or rejection, or the scientific quality and collegiality of peer review reports?

There were 4 RCTs testing the effects of revealing the peer reviewers’ identities (6, 14, 16, 17), and 1 study comparing a journal that uses single-blind peer review with one that reveals author and peer reviewer names, publishing the peer review report as part of the pre-publication history of articles accepted in the journal (9 – available in an abstract only). All of them are in biomedical journals. They cover over 1,200 peer review reports, about half of which are for The BMJ.

There was also 1 RCT testing telling peer reviewers their reviews would be posted on the internet as part of the pre-publication history of the article (15). That trial is for The BMJ too.

The trials are methodologically sound, with one exception that has fundamental flaws. Questions about the quality of that study (17) were raised in the systematic review by Jefferson and colleagues. The intervention and control groups had very different sizes (222 and 186): groups in a properly randomized trial should have a similar size. Intention-to-treat analysis wasn’t done and there’s not enough information about what happened to the drop-outs. So this study is more prone to bias than the others.
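
As a rough way to gauge how unusual a 222 versus 186 split would be, here is a minimal sketch of an exact binomial calculation. It assumes simple 1:1 randomization with each participant allocated independently, which is my assumption for illustration and not something reported about study (17). The function name is hypothetical. If the trial used blocked randomization, an imbalance this size would be far less likely than this calculation suggests.

```python
from math import comb


def two_sided_binomial_p(n_a: int, n_b: int) -> float:
    """Probability of a split at least as uneven as (n_a, n_b).

    Assumes each participant is independently allocated to either
    group with probability 0.5 (simple randomization). This is an
    illustrative assumption, not the method of any cited trial.
    """
    n = n_a + n_b
    larger = max(n_a, n_b)
    # Upper tail: P(X >= larger) for X ~ Binomial(n, 0.5).
    upper_tail = sum(comb(n, k) for k in range(larger, n + 1)) / 2 ** n
    # The distribution is symmetric at p = 0.5, so double the tail
    # and cap at 1 (the cap matters only for perfectly even splits).
    return min(1.0, 2 * upper_tail)


# Group sizes quoted in the text for study (17).
p = two_sided_binomial_p(222, 186)
print(f"Chance of a split at least this uneven: {p:.3f}")
```

A calculation like this only describes chance imbalance under the stated assumption. It can’t show whether allocation was concealed or what happened to drop-outs, which are the other concerns raised about this study.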

In the trials, editors did not judge the reviews as better on global scores on average. The researchers in the comparative study of open peer review judged the reports to be better.

Peer reviewers were more likely to substantiate the points they made (9, 14, 16, 17) when they knew they would be named. They were especially likely to provide extra substantiation if they were recommending an article be rejected, and they knew their report would be published if the article was accepted anyway (9, 15).

In some studies, when the reviewers knew they would be named, they were likely to be more courteous or regarded as helpful by the authors (9, 14, 17).

There’s no support here for the concern that naming peer reviewers leads to systematically less critical reviews – and some support for improvement.

There was one large effect: many peer reviewers declined the invitation to peer review when they knew there was a chance they would be named – especially when they knew their colors would be nailed to the public mast if the article was published (15).

3. Did masking identities of authors and/or peer reviewers affect detectable bias against authors?

This is the most complicated question. It’s also the most important one, since it seems anonymity makes no major difference to the quality of the published literature – at least not in the journals where that’s been studied.

Does revealing author and/or peer reviewer identity increase or reduce bias? There were several studies that were either set up to assess the impact of anonymity on bias, looked for signs of impact in subgroups, or compared single- and double-blind journals retrospectively. But none looked at any effect on bias of revealing peer reviewers’ identities.

There were 7 studies here, including 2 RCTs (1, 5), 2 non-randomized controlled trials (2, 11), and 1 comparison study with historical control (3). There was 1 study comparing acceptance rates between journals that did and did not blind authors (4), and 1 comparing reports in a single-blinded journal of peer reviewers who were sure of the authors’ identities anyway, those who suspected they knew, and those who truly had no idea (7).

Other than the RCTs, these studies did not include good methods to control for confounding. Some had serious methodological flaws. So conclusions based on the non-RCTs here are going to be shaky.

There was no difference between acceptance rates for manuscripts from the US versus other countries in 1 RCT that measured this outcome (1). The other RCT looked at whether or not seniority affected outcome in a trial of naming versus blinding authors (5). It found that manuscripts by more experienced scientists were rated more highly by everyone, and even more highly when authorship was blinded.

Out of the non-randomized studies, 1 found reviewers were less favorable to articles when they had no idea who the authors were (7). Gender of the peer reviewers made no difference. (No analysis was apparently done on gender of the authors.)

The other 4 non-randomized studies examined gender by looking at whether reviewers knew the names of authors when one or more of them were women. There’s no consistency in how blinding was done in journals in these studies, nor in how gender was determined (such as any author, lead author, or corresponding author).

The methodological weakness of 1 of these was already discussed above (2). Blinding authors didn’t make a difference to the acceptance rate of manuscripts with women authors in that study. But male reviewers were more likely to recommend rejection of manuscripts with women authors, and vice versa. In another (11), women reviewers were more likely to reject men’s manuscripts, but not vice versa.

The last 2 both found an association between journal policy of blinding authors and the rate of publications with women authors (3, 4).

The first of these had a historical control, looking at rates before and after a journal changed its policy, but could only look at publications, not at acceptance rates (3). The researchers gathered data for comparable journals in that field for the same period, but didn’t do detailed analyses of the available variables or consider the risks of multiple testing.

Other authors re-analyzed the data from that study, and found no interaction effect between the change of policy and the increased rate of publications with women authors [PDF]. It appears the rate was increasing in those fields anyway.

The other looked at rates of acceptance of articles submitted to 12 economics journals between 1978 and 1979, although not the whole period for each of them (4). Half had single-blind reviewing, half double, but this was coincidental: they were the 12 responding to a request for data from 36 journals. Manuscripts with one or more female authors were more likely to be accepted at a journal with a policy of double-blind peer review in that study.

There was 1 other controlled study on the question of gender bias, which involved students (including graduate students) rather than peer reviewers from a journal. So I didn’t include it on my list, or review its quality thoroughly. (It found no evidence suggesting gender bias.)

There’s not much help in this group of studies. We would need much stronger evidence to draw conclusions about the effect of author blinding on bias. Based on these studies, a policy of blinding authors could have a benefit, a detrimental effect, or no effect on gender bias.

So with that knowledge from studies, how does revealing identities stack up against anonymity and attempting to blind authorship?

I think institutionalizing anonymity in publication peer review is probably going out on a limb. It’s only partially successful at hiding authors’ identities, and mostly only when people in their field don’t know what authors have been working on. If blinding authors was a powerful mechanism, that would be evident by now.

Author and peer reviewer anonymity haven’t been shown to have an overall benefit, and they may cause harm. Part of the potential for harm lies in journals acting as though anonymity is a sufficiently effective mechanism to prevent bias.

That said, we don’t have an overwhelming evidence base for anything. In my opinion, the weight tilts in one direction at the moment, with a trade-off of potential harms we don’t know enough about. This ongoing knowledge gap is letting science and scientists down.

Although there are dozens of journals in these studies, the strongest evidence comes from a relatively small number. Collegiality, competitiveness, and discrimination are going to vary from one journal’s community to another. While there is reassurance about systemic gender bias in peer review since the 1990s (for example here, here, and here), that certainly doesn’t mean it never happens.

At some prominent science journals, odds are stacked against women (for example, Nature and Science). And as Fiona Ingleby recently showed from her experience at PLOS One, there are individuals out there who are still comfortable with extreme levels of sexism. Journals need to monitor editor dynamics deeply to be sure there isn’t a problem. (See for example JAMA’s self-analysis).

There are clear signs of other biases that have been shown at some journals. Stand-outs are editors’ personal connections, institutional prestige, as well as a US and English language advantage internationally. Science has a status bias problem.

The last thing we need, though, is for people to avoid submitting articles because of fear of bias or aggressive peer review. Under-representation in article submission could be a bigger problem than bias from peer reviewers.

A lot of scientists are concerned that there’s widespread fear of retaliation for writing critical peer review reports about the work of people with more status than them. There’s no doubt that happens. But then, even who you cite or don’t cite in an article or anywhere else can come back and bite you.

Building a reputation and increasing your network of collaborators is critical to success in science, too – and that can come from being visible and critical. What is science about, if not applying your intellect critically? But early career researchers have to make their decisions about anonymity without the benefit of good scientific evidence on the balance of potential benefits and harms and wider impact on their careers.

Some journals offer authors the option to opt-in to double-blind reviewing. That doesn’t seem to have been evaluated in a published study. Although double-blind peer review has a lot of advocates, that doesn’t mean a lot of people want to do it. Nature reports that only 20% of authors take the option. It might protect them from bias, but this is unknown territory. Hopefully, choosing it doesn’t itself become a cause of bias.

On the other hand, the anonymity of peer review reports definitely enables negative, and even egregious, behavior – without accountability. Revealing who’s peer reviewing can also reveal conflicts of interest of which editors are either unaware, or which they perhaps even allow without informing the authors. Accountability increases the care and effort many people would put into a peer review.

One of the arguments in favor of allowing anonymity is that people aware of scientific misconduct in a manuscript won’t put that in writing unless they can be anonymous. I’m not sure what the chances are of just that person being chosen to be the peer reviewer, even though it’s important when it does happen. Blinded peer review has never been a good mechanism for preventing research involving misconduct getting into the literature, though, so it’s hard to weigh this up against common issues.

Misconduct occurs in peer review too. Opening the black box might give others a chance to detect issues like a large peer review “ring” that continued undetected for too long, and the kinds of peer review abuse investigated by COPE (the Committee on Publication Ethics). An open system allows researchers to investigate a range of other issues, such as this study by Sally Hopewell and colleagues of the impact on the quality of reports of clinical trials.

Substantiating our statements, and being accountable for what we say and how we say it when we are gatekeepers for publication, is decisive for me. That’s all the more important for people whose work or critique loses out because of status bias, and those who may be repelled from publishing and science by reviewer aggressiveness.

There are scientific communities where open collaboration, articulating critique well and accepting it too, are not only the cultural norm, they’re what propel successful careers. That needs to spread widely. I think it will happen one scholarly community and journal at a time. However the rest of science catches up, I doubt it can happen behind closed curtains.

Update: By October 2017, and digging more into research on bias in journals, I shifted to a stronger position. I posted about that here: The Fractured Logic of Blinded Peer Review in Journals. And later, Signing Critical Peer Review & the Fear of Retaliation: What Should We Do?

~~~~

If you’re interested in the history of peer review, check out my previous post, Peer Review BC (Before Citations) and these overviews from Drummond Rennie and Dale Benos and colleagues.

The cartoons are my own (CC-NC license). (More at Statistically Funny and on Tumblr.)

Declarations: A part of my day job is responsibility for PubMed Commons, a forum for open, signed, post-publication commenting. I am currently an academic editor for PLOS Medicine and on the human research ethics advisory group for PLOS One. I’ve had editorial roles with other journals in the past, including part-time professional lead editing.

As I mention The BMJ’s research and policy favorably in this post, I note that I have close ties with this journal. I was a member of their ethics committee for several years (including regular supported travel) and participated in advising on some special issues of the journal. I recently traveled to speak at Evidence Live with their support, and I’ve published multiple articles with them (see this search, and a further two invited commentaries: this and at the foot of this). I contributed a chapter to their book, Peer Review in Health Sciences (2nd edition, 2003, edited by Tom Jefferson and Fiona Godlee).

Alphabetical list of comparative studies on revealing and not revealing author/peer reviewer identities:

Note: Quite a few of these have academic spin, especially in the abstracts.

The study is done in biomedical publication unless identified “Non-biomed”.

1. Alam (2011): RCT (randomized controlled trial).

2. Blank (1991): Non-randomized controlled trial. Non-biomed.

3. Budden (2008): Comparative study. Non-biomed. [PDF]

4. Ferber (1980): Comparative study. Non-biomed.

5. Fisher (1994): RCT.

6. Godlee (1998): RCT.

7. Isenberg (2009): Comparative study.

8. Justice (1998): RCT.

9. Kowalczuk (2013): Comparative study. (Abstract only.)

10. Laband (1994): Comparative (cross-sectional) study.

11. Lloyd (1990): Controlled trial, method of allocation to the groups unclear. Non-biomed.

12. McNutt (1990): RCT.

13. Van Rooyen (1998): RCT.

14. Van Rooyen (1999): RCT.

15. Van Rooyen (2010): RCT.

16. Vinther (2012): RCT.

17. Walsh (2000): RCT.

(The controlled trial I “excluded” because the reviewers weren’t associated with a journal was Borsuk (2009).)

* The thoughts Hilda Bastian expresses here at Absolutely Maybe are personal, and do not necessarily reflect the views of the National Institutes of Health or the U.S. Department of Health and Human Services.

Thanks for this very useful list. I’m largely with Richard Smith (see http://www.timeshighereducation.co.uk/news/slay-peer-review-sacred-cow-says-former-bmj-chief/2019812.article). Pre-publication peer review just isn’t working, except perhaps in a small number of specialist journals. Post-publication review has had a slow start, but it’s now taking off rapidly. My guess is that it will replace pre-publication review before long (though I’ve no idea how long it will take): see http://www.theguardian.com/science/head-quarters/2015/may/12/will-traditional-science-journals-disappear#comment-52047194

This is a great article, but the floating panel of little icons for Facebook, Twitter etc. makes it very hard to read. In every view they obscure the first few letters of 9 or 10 lines of the text at the top left.

None of the other PLOS blogs I just looked at have this problem, so maybe it’s just a setting you could change.

Thanks for the heads up (and the compliment). I removed it, which I hope solved the problem!

Thanks for this excellent summary. For number 2, it’s possible that asking reviewers to reveal their identities doesn’t make them write better reviews, rather it filters scientists who are better reviewers (or a combination of both).

Thanks, Adrian. On the “chicken and egg” of named reviewers writing better reviews because they’re better reviewers: while that’s certainly possible, this was accounted for by randomization. Reviewers who wouldn’t entertain the possibility of being named wouldn’t be expected to participate in a trial like that. For the trials, reviewers all signed up knowing they could be assigned to the “open” group. For example in (15), which I cited for the “especially” statement, although 55% of the reviewers approached declined to participate in peer reviewing the manuscript because it was part of the trial of open reviewing, “No reviewers who had agreed to participate subsequently declined after the group allocation was revealed”. Those assigned to the open group then spent an average of 25 minutes longer on their reviews.

Great to see such a thorough analysis of the literature.

I don’t agree with your conclusions, though, regarding author blinding. Surely the discussion we need to be having is whether research should be judged purely on the merits of what is reported, or whether knowledge of the author should be part of what we are judging. Personally, I think we should be judging just the research itself, in which case we should blind author identity – after all, it’s easy enough to do. I think the issue here is that the default has been to have identifiable authors, and the research you review is asking whether changing that makes a difference: as you note, the findings, such as they are, are mixed, but the evidence is not particularly impressive. I would argue that the default should be blinded authors, and if someone wanted to make a case for revealing the authors they would have to prove it led to better decision making. I think that would be a hard case to make for several reasons.

First, if we take author identity into account, then there will be authors a reviewer knows and those they don’t know. For those who are not known, the very fact they are unknown may count against them. For those who are known, it gets more interesting. It’s often assumed that fame will boost the appraisal of someone’s work, but it can cut both ways. I have a low opinion of some of the famous people in my area. However, I try very hard to be objective and not allow any prior prejudices to cloud my judgement if I get their work to review. But I’d far rather not know the author. Yes, I may be able to guess the research group the paper comes from, but I won’t know if the work is from a graduate student or the PI. I aim to write reviews constructively in any case, but there have been instances when blinding has helped me do this, where the author turns out to be someone I have views about.

There’s also the question of prejudice on the basis of gender or ethnicity. We know from other research that such effects happen in many areas of evaluation – I appreciate the evidence from your review was not strong on this point, but I think you’d need much bigger studies to show any effect, because it would be small relative to other factors. As Virginia Valian pointed out, a cumulation of small effects can end up leading to big differences over time in an individual’s career.

I think the issues on blinded peer review are completely different and agree with you that anonymising reviewers produces a range of problems that probably outweigh any benefits. But from what we currently know about blinding of authors, I’d argue that both logical and cost-benefit considerations favour treating blinding of authors as the default, not as the exception.

Thanks, Dorothy. I agree we really need better research on the question of whether it reduces or adds to bias, because at the moment, it seems it really could go either way.

Maybe it depends on the area, too. I saw some arguments showing why there are times you really need to know whether the facilities involved had adequate capacity. I’m traveling at the moment, but when I’m back I’ll add a link to a discussion about that.

I can’t remember the last time I reviewed something where there was an attempt to blind the authors, and it was never common for me. While I can see an argument that the onus is on those arguing for a change in practice to prove a change would be beneficial, I’m not sure there really is a standard. In some of the papers I read just assessing practice, there were disciplines where the journals were pretty evenly split, some where blinding was more common, others where blinding was less common, and now there’s opt-in journals, too. I don’t know how it ends up across the board for manuscripts submitted as opposed to number of journals. Your point can definitely apply to individual journals, or individual scholarly communities, though.

I think it also probably differs in areas where conflicts of interest are more critical and common, and what kinds of papers we’re talking about. When it’s more essay-like, or a review of the literature on adverse effects of a drug, I want to know if the authors are from the manufacturers of the drug (or its competitor). There’s particular diligence needed on known sources of bias to the literature such as that. (Added shortly after posting: here’s a piece I wrote on author/sponsor bias in clinical research.)

I agree that the mountain of bias in a person’s life and career is made up of molehills, so the molehills matter. But I also think that given how poorly blinding performs, we need far better ways of addressing bias in editorial peer review than masking of authors. After all, the authors are not blinded to the editors, and they’re choosing the reviewers and making the decision. There’s varying concordance of editorial decisions with peer reviewers’ reports, and indeed, of reviewers’ recommendations with each other. The biggest danger in focusing on blinding of authors to reviewers as much as we have done is that it has kept the focus away from editors.

It’s a bit hard in mathematics and physics where the papers have been posted on the arXiv, for instance, well in advance of referees getting given the papers. And people who are engaged with the literature will have seen the paper already and no doubt formed a first impression.

Post-publication review won’t take off before there is some systematic incentive for scientists to review other papers. Right now, the incentive for being a reviewer, aside from altruistic reasons (helping friends, working for the benefit of science as a whole), is that scientists earn prestige by being on editorial boards or being listed as a reviewer.

So some kind of systematic review incentive must be made. Perhaps a collective where a large group of researchers take turns to review each other’s papers. They would then earn some kind of currency which they could use to get other researchers to review their articles. A system like this would, however, not be so easily compatible with the observed power law of number of scientific publications by researchers. The most prolific authors would not gain enough review credit to spend on getting others to review their papers.

Ideas?

I would start by saying that I am not a researcher nor involved in peer reviews, but that the topic often comes up in discussions with academic colleagues, followed shortly afterwards by discussion around how difficult it is to overcome.

I wonder if, given the inconclusive results of this review, and the desire to blind review despite the lack of clear evidence of benefit to date, we need a slightly different approach which is more reviewer based than process based.

Motivating reviewers to actively manage their biases and giving them tools to help with this may support wider attempts such as blind review. This requires us to articulate both a moral but also a business case for trying to mitigate bias in our reviews.