Evidence Live and Kicking (Part 1)

“Evidence based medicine: a movement in crisis?”

That 2014 editorial by Trisha Greenhalgh and colleagues echoed through the hallways leading up to this year’s Evidence Live conference, on now at Oxford University.

Day 1 down, and the question is well and truly answered. The “EBM movement” is facing solid bombardment – and about time, too, I reckon. But the discussion of scientific evidence itself, and its role in medical practice and healthcare decision making – that’s livelier than ever.

I haven’t seen seriously awesome statistician and clinical trial expert Richard Peto give a talk in a long time. He got the day started like a McLaren launching off the starting grid at a Formula 1 race.

There’s no understatement in Peto’s advocacy of big trials. “This was just human sacrifice,” he said of the use of magnesium infusion for heart attacks. The treatment had been used because small trials gave a misleading impression of benefit, one that a single massive trial later overturned.

Here’s the slide he was talking about. It shows a forest plot (I explain those in my quick primer on meta-analyses). Peto also pointed to an example of the reverse. It’s a tough one, though, isn’t it? Incredible Hulk trials can’t answer all the important questions we have about treatments, and it’s not as though they can truly eliminate all bias.

“Virtually all subgroup analyses are rubbish”: you should be wary, Peto said, of any finding from a subgroup when the overall effect of a treatment isn’t strongly shown. When a medical journal demanded subgroup analyses from one of his group’s trials, Peto gave them analyses by astrological sign to make his point – with 12 signs, he pointed out, the odds are always good you’ll strike gold with at least one of them! If you don’t know about that analysis, you can read about it here. (My explanation of the risks of trawling for subgroups is here.)
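Peto’s zodiac quip is really a point about multiple comparisons, and the arithmetic is short. Here is a minimal sketch in Python, resting on assumptions that are mine, for illustration, and not a reconstruction of Peto’s analysis: the treatment has no real effect in any subgroup, each of the 12 sign subgroups is tested separately at the conventional 5% significance level, and those 12 tests are independent. It computes the chance of at least one “significant” subgroup exactly, as 1 − (1 − 0.05)^12, and then checks that with a quick simulation in which each null p-value is drawn uniformly between 0 and 1.

```python
import random


def prob_at_least_one_false_positive(k: int, alpha: float = 0.05) -> float:
    """Chance that at least one of k independent tests of a true null hypothesis gives p < alpha."""
    return 1 - (1 - alpha) ** k


def simulate(k: int = 12, alpha: float = 0.05, n_trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo check of the formula above.

    Assumption: under a true null hypothesis (and a continuous test statistic),
    each subgroup's p-value is uniformly distributed between 0 and 1.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # One "trial" split into k subgroups (e.g. 12 star signs), none with a real effect.
        if min(rng.random() for _ in range(k)) < alpha:
            hits += 1
    return hits / n_trials


if __name__ == "__main__":
    print(f"exact:     {prob_at_least_one_false_positive(12):.3f}")
    print(f"simulated: {simulate():.3f}")
```

Under those assumptions the chance of striking “gold” in at least one sign comes out at about 46%, close to a coin toss, and it only climbs as the number of subgroups grows.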

Patrick Bossuyt kept up the pace, tackling the issue of diagnostic tests – a particularly complex area to evaluate. How do you decide whether a diagnostic test is worthwhile? By analyzing its diagnostic accuracy, or by running standard trials to find out its long-term consequences for the health problems it is meant to improve?

Bossuyt showed how much a test’s accuracy varies with context – and how hard it is to study the consequences of using tests, too. The way forward now? He’s concentrating on exploring the reliability of tests of diagnostic accuracy together with linked evidence. Linked evidence pieces together a chain of inferences from different studies that look at the problem from different angles.

Bonus points to Bossuyt for a most entertaining telling of the urban myth that we have the Beatles to thank for the CT scan. The CT scan was developed by EMI, awash in profits from sales of Beatles records. The fact that ruins this great story? British taxpayers kicked in far more money than EMI did.

Another stellar performance came from Emily Sena, from CAMARADES, the group spearheading systematic reviews and meta-analyses of preclinical animal studies.

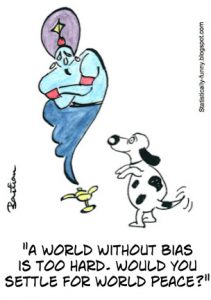

Sena spoke of the many reasons for translational failure – why apparent benefits from animal studies so often don’t pan out in humans. A common reason is that they didn’t really pan out in the animals, either: the experiments were badly done, not published, or overlooked. (More on this at Statistically Funny.)

The goal of using fewer animals in experiments, Sena said, has added to the problem of under-powered studies: we need fewer, but better, animal experiments. She added one I hadn’t heard of to the long list of ways to do a study badly: giving animals the treatment before giving them the disease!
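Why do fewer animals per experiment mean under-powered studies? A rough sketch in Python makes the point. It is only an illustration under my own assumptions, not figures from Sena’s talk: a normal approximation to a two-sided, two-sample comparison at the 5% level, equal group sizes, a real treatment effect of half a standard deviation (a standardized effect size of 0.5), and a handful of purely illustrative group sizes.

```python
from statistics import NormalDist


def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test for a standardized effect size d.

    Assumptions (illustrative only): normal approximation, equal group sizes,
    known common standard deviation.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    # Expected value of the test statistic when the true effect is d.
    noncentrality = d * (n_per_group / 2) ** 0.5
    # Chance the statistic clears the critical value in the direction of the true effect;
    # the tiny chance of "significance" in the wrong direction is ignored.
    return z.cdf(noncentrality - z_crit)


for n in (5, 10, 20, 64):
    print(f"{n:>3} animals per group: power ~ {approx_power(0.5, n):.2f}")
```

Under these assumptions, 10 animals per group gives only about a 20% chance of detecting a real effect of that size, while roughly 64 per group is needed to reach the conventional 80%. Most small experiments will miss a genuine effect, and the few that do reach significance tend to overstate it.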

Not that it’s easy to tell when a study has been done well or badly. That was clear from the work presented by Gerald Gartlehner. One widely used method for assessing the quality and strength of evidence these days is GRADE. (Disclosure: I was a member of the GRADE Working Group for a few years.)

Gartlehner’s data came from an evaluation of 160 bodies of evidence “GRADE’d” by professional systematic reviewers. What was the predictive value of a top strength-of-evidence rating? “Disappointing,” Gartlehner said: not much better than a coin toss. We don’t have to go back 15 years and start again, he argued, but we do have a long way to go.

This was a key part of my talk on Monday, and it’s a subject I wrote about here at Absolutely Maybe a few weeks ago, too. We are nowhere near as good at interpreting and communicating the results of clinical research as we’d like to think.

Worse, cognitive biases, intellectual conflicts of interest, and the commodification of “EBM” are doing a lot of harm. After all, a systematic review is a descriptive study that is itself highly prone to bias. But more on that later. Day 2 is starting…

Part 2: Rifts and Bright Spots in Evidence-Based Medicine.

Live tweets at #EvidenceLive.

~~~~

The photos in this post were taken by me at Evidence Live in Oxford on 13 April 2015. The cartoons are my own (CC-NC license). (More at Statistically Funny.)

Disclosure statement: I was invited to speak at Evidence Live, and my participation was supported by the organizers, a partnership between The BMJ and the Centre for Evidence-Based Medicine at the University of Oxford’s Nuffield Department of Primary Care Health Sciences.

* The thoughts Hilda Bastian expresses here at Absolutely Maybe are personal, and do not necessarily reflect the views of the National Institutes of Health or the U.S. Department of Health and Human Services.

Hilda

Fabulous post. I am so looking forward to Part II, in which I am hopeful that we will broach the subject of bias from a human perspective, as in “who is on the panel assessing the evidence”? What framing are they using to cast the EBM net? Who selects the panel, and are patients involved in deciding who sits on it? Are meta-analyses and systematic reviews of under-powered trials useful? And why are we, who care about bias so much, not focusing on the human element? Love your work!

Thanks, Lorraine! Those are all important questions – some of them are touched on a little in part 2: Rifts and bright spots. When the filmed plenaries go online, catch Iona Heath’s talks: she was fabulous on the human element of medical care.

Great work Hilda. Wish I could be there. Bit scary really how easily EBM can be subverted by both unwitting enthusiasts and people with vested interests.

Thanks, Cindy! It’s in the nature of both medicine and social movements, I think, to have particularly acute problems of self-interest and abuse of power. “EBM” could have been better if it had applied its own principles to itself as a movement. It’s interesting that this didn’t happen: that a movement that sees itself as self-critical has been able to maintain such major sociopolitical blind spots and pay so little attention to the adverse effects of what it does. It’s wonderful that Evidence Live deliberately creates a space that enables the sociopolitical critique to occur within a movement space. That’s a great development.